In the previous post, we covered four main deployment patterns: canary, blue/green, rolling, and shadow deployment.

With the gradual maturation of cloud-native technologies, enterprises are seeking more flexible and scalable systems, leading to the widespread adoption of microservice architecture.

While microservices offer numerous advantages, they present new challenges for architects and operations engineers. In a monolithic architecture, the application is designed, deployed, and scaled as a single unit. With microservices, an application consists of many interacting services, often built with different languages and frameworks, which complicates deployment.

As a result, enterprises need to employ various deployment strategies to ensure smooth application deployment, maintain integrity, and achieve optimal performance.

Deployment Patterns

Microservices architectures can adopt different deployment patterns, each designed to address particular functional and non-functional requirements. Services can be written in various programming languages or frameworks, or even in different versions of the same language or framework.

Each microservice runs as one or more service instances and may bring its own components, such as a UI, a database, and backend logic. Microservices must be independently deployable and scalable, with isolated instances that can be built and deployed quickly and that use computing resources efficiently. The deployment environment must therefore be reliable, and services must be monitored.

Microservices Deployment Patterns

Microservices deployment patterns can be categorized into three main types:

Multiple Service Instances per Host

Single Service Instance per Host

Serverless Deployment

Multiple Service Instances per Host

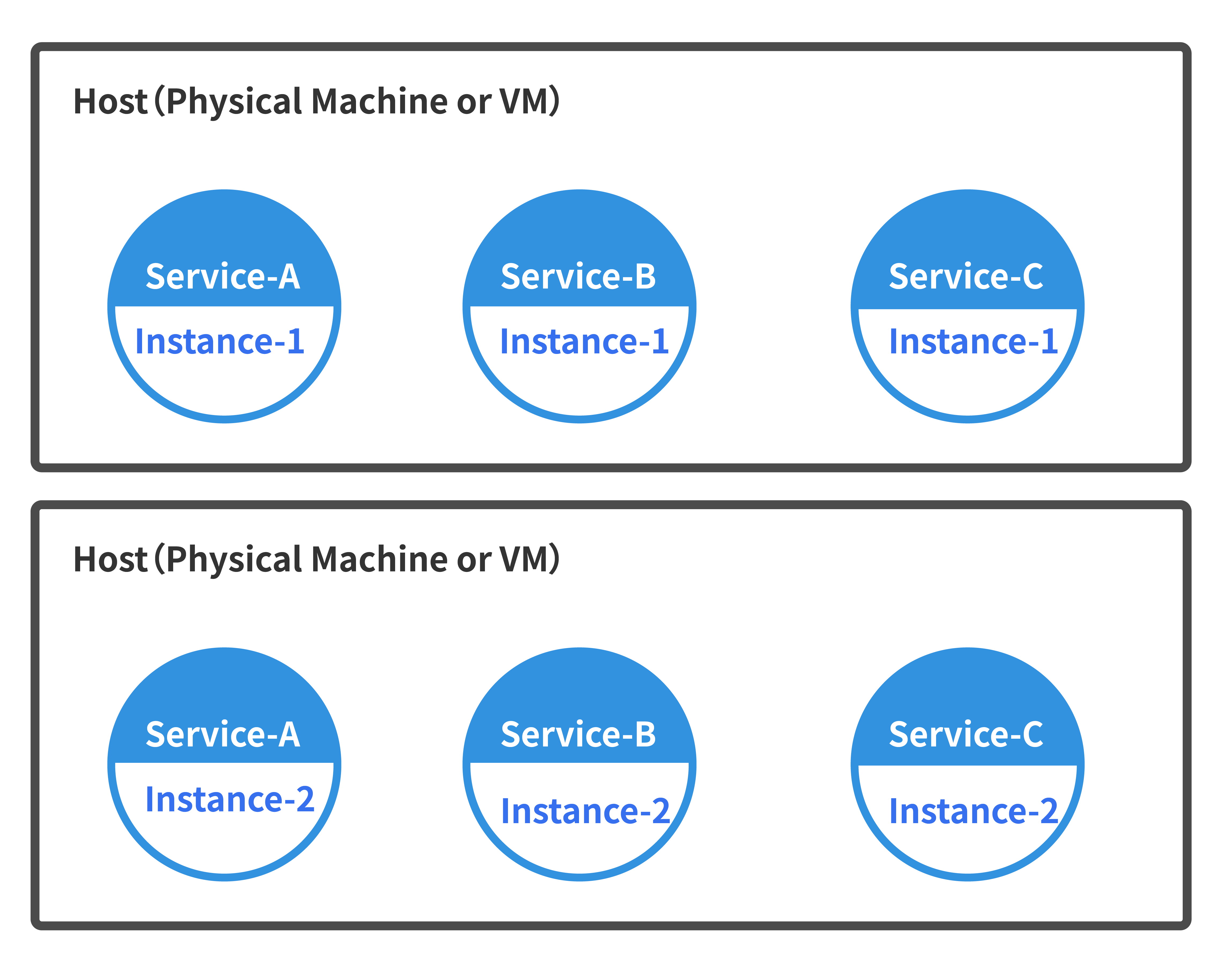

In this pattern, you provision one or more physical or virtual hosts and run multiple service instances on each host. This is the traditional way to deploy applications, with each service instance listening on its own port on a host.

One variant deploys each service instance as a process or a process group. For instance, a Java service instance might be deployed as a web application on an Apache Tomcat server. Another variant runs multiple service instances within the same process or process group, for example multiple Java web applications on the same Apache Tomcat server, or multiple OSGi bundles in the same OSGi container.

One of the main advantages of running multiple service instances on a single host is efficient resource utilization, where multiple service instances share a server and its operating system. This pattern also allows for the automation of starting and stopping processes through scripts, with configurations containing deployment-related information such as version numbers.
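To make the "one port per instance on a shared host" idea concrete, here is a minimal sketch in Python. The `Host` class, the service names, and the port numbers are hypothetical and only model the bookkeeping; they are not part of any real deployment tool.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Host:
    """A physical or virtual machine that runs several service instances."""

    name: str
    # Maps each bound port to the service instance listening on it.
    ports: Dict[int, str] = field(default_factory=dict)

    def deploy(self, instance: str, port: int) -> None:
        """Register an instance on a port, rejecting ports already in use."""
        if port in self.ports:
            raise ValueError(
                f"port {port} on {self.name} is already used by {self.ports[port]}"
            )
        self.ports[port] = instance

    def stop(self, port: int) -> str:
        """Remove and return the instance bound to the given port."""
        return self.ports.pop(port)


# Two instances share one host; each gets its own port (hypothetical names).
host = Host("host-1")
host.deploy("orders-v1.2.0", 8080)
host.deploy("payments-v2.0.1", 8081)
```

The sketch also hints at the main risk of sharing a host: instances compete for the same ports and other resources, so a deployment script has to detect conflicts before starting a process.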

Single Service Instance per Host

In many cases, microservices need their own space and a separate deployment environment to avoid conflicts with other services or instances. When services cannot share an environment because of potential resource, language, or framework conflicts, each service instance is deployed on its own dedicated host. This host can be either a physical or a virtual machine.

While this ensures no conflicts with other services and that the service remains entirely isolated, it comes at the cost of increased resource consumption.

Single Service Instance per VM

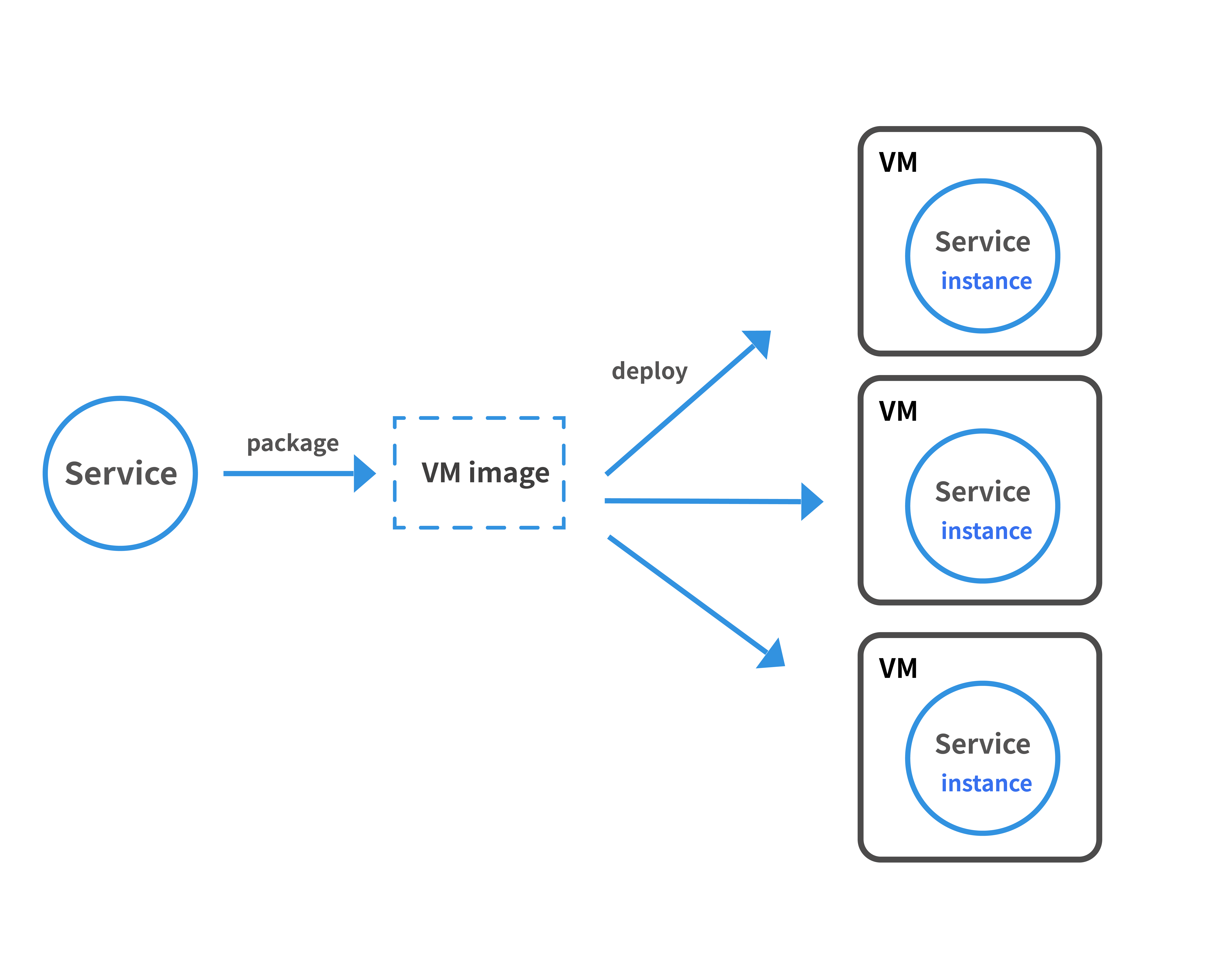

The architecture of microservices demands robustness, quick start-stop capabilities, and the ability to rapidly scale up and down. To achieve this without sharing any resources with other services or encountering conflicts, each microservice can be packaged as a virtual machine (VM) image, with each service instance being a virtual machine.

Developers can easily scale services by increasing the number of service instances. This deployment pattern enables each service instance to have its dedicated resources, and it can be closely monitored.
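As a rough illustration of scaling by instance count, the sketch below estimates how many VM instances a service needs for a given load. The function name, the capacity figures, and the simple ceiling rule are assumptions made for this example, not a rule taken from any particular platform.

```python
import math


def instances_needed(requests_per_second: float,
                     capacity_per_instance: float,
                     minimum: int = 1) -> int:
    """Estimate how many instances are needed to serve the given load.

    Assumes every instance handles the same load and that the load is
    spread evenly, which is a simplification.
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    required = math.ceil(requests_per_second / capacity_per_instance)
    # Never scale below the minimum, so the service stays available.
    return max(minimum, required)


# 450 requests/s with instances that each handle 100 requests/s.
vm_count = instances_needed(450, 100)
```

In practice each additional instance here is a whole virtual machine, so the count directly drives both cost and startup time.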

The main advantage of this pattern is the isolation of each service instance. However, a significant drawback is the resource consumption and the time required to build and manage virtual machines.

Single Service Instance per Container

This deployment pattern combines much of the isolation of virtual machines with lighter resource usage and greater efficiency. Each microservice instance runs in its own container, which suits microservices well because a container consumes far less memory and CPU than a full virtual machine. Containers run on a container runtime such as Docker, and many containers can share a single host.

This allows for efficient resource usage, rapid application startup, and scaling based on demand, reducing unnecessary expenses. It supports easy creation and management of containers, simplifying scalability and deployment.

While this approach offers advantages, container images must be rebuilt and redeployed to pick up new features and bug fixes, which is time-consuming and error-prone across many containers unless it is automated. Additionally, because containers share the host operating system kernel, they generally provide weaker isolation than virtual machines.
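The one-instance-per-container idea can be sketched by building one `docker run` command per service instance. The service name, image tag, ports, and resource limits below are hypothetical; the sketch only assembles the argument lists and does not start any containers.

```python
from typing import List


def docker_run_command(service: str, image: str, host_port: int,
                       container_port: int, instance: int) -> List[str]:
    """Build a `docker run` argument list for a single service instance."""
    return [
        "docker", "run",
        "--detach",                                    # run in the background
        "--name", f"{service}-{instance}",             # one container per instance
        "--publish", f"{host_port}:{container_port}",  # host port -> container port
        "--memory", "256m",                            # assumed memory limit
        "--cpus", "0.5",                               # assumed CPU limit
        image,
    ]


# Three instances of a hypothetical "orders" service, each in its own
# container and each published on a different host port.
commands = [
    docker_run_command("orders", "example/orders:1.2.0", 8080 + i, 8080, i)
    for i in range(3)
]
```

Each list could be passed to `subprocess.run` on a machine with Docker installed. In a real setup an orchestrator would usually manage these containers instead of hand-built commands.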

Serverless Deployment

In certain situations, teams do not need to know about the underlying deployment infrastructure. In such cases, running the services can be outsourced to third-party vendors, typically cloud service providers. Enterprises are indifferent to the underlying resources and focus only on running microservices on a platform. Costs are based on the resources the provider allocates for each service invocation, and the provider executes the code for each request in a sandboxed environment, whether that is a container, a virtual machine, or another mechanism.

Service providers handle provisioning, scaling, load balancing, patching, and securing the underlying infrastructure. Popular serverless deployment platforms include AWS Lambda and Google Cloud Functions.

Serverless deployment platforms offer highly elastic infrastructure, automatically scaling services to handle loads. This saves time spent managing low-level infrastructure and reduces expenses, as providers bill only for resources consumed during each service invocation.
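For a sense of what a serverless service looks like, here is a minimal sketch of a Python function in the style of an AWS Lambda handler. It assumes the function sits behind an HTTP gateway that passes path parameters in the event and expects a `statusCode`/`body` response; the `orderId` parameter and the response payload are hypothetical.

```python
import json


def handler(event, context):
    """Entry point that the platform calls once per request.

    `event` carries the request data and `context` carries runtime
    metadata; the code never sees the server it runs on.
    """
    # Assumed event shape: path parameters supplied by an HTTP gateway.
    order_id = (event.get("pathParameters") or {}).get("orderId", "unknown")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"orderId": order_id, "status": "accepted"}),
    }


# Local invocation with a hand-written event; no infrastructure involved.
response = handler({"pathParameters": {"orderId": "42"}}, None)
```

The function contains only business logic. Provisioning, scaling, and routing are left to the provider, which matches the division of responsibilities described above.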

Conclusion

Microservices deployment patterns and products are continuously evolving, and more patterns are likely to emerge in the future. The patterns described here are popular and widely used, and they have proven successful and reliable. As technology progresses, however, the industry continues to explore new solutions. In future articles, we will look at how to secure application deployment, so stay tuned!