Application deployment is the process of delivering software to users, typically involving steps like configuring the environment, installation, and testing.

Nowadays, most enterprises automate at least some of these steps when deploying new applications. The deployment strategy significantly impacts the performance, stability, and speed of an application. For that reason, teams often test an update with a small subset of users before rolling it out to everyone.

Modern standards for software development and user experience require developers to update their projects continuously. Deployment and integration have become routine operations, and many modern applications are deployed every day. That's why having an effective deployment technique is more crucial than ever.

Currently, businesses are pursuing more flexible and scalable systems, leading to the widespread adoption of microservice architecture and cloud infrastructure. Flexible architectures usually involve multiple development teams, which makes deployments harder to coordinate.

This article will introduce several key stages of the application deployment process and different application deployment patterns.

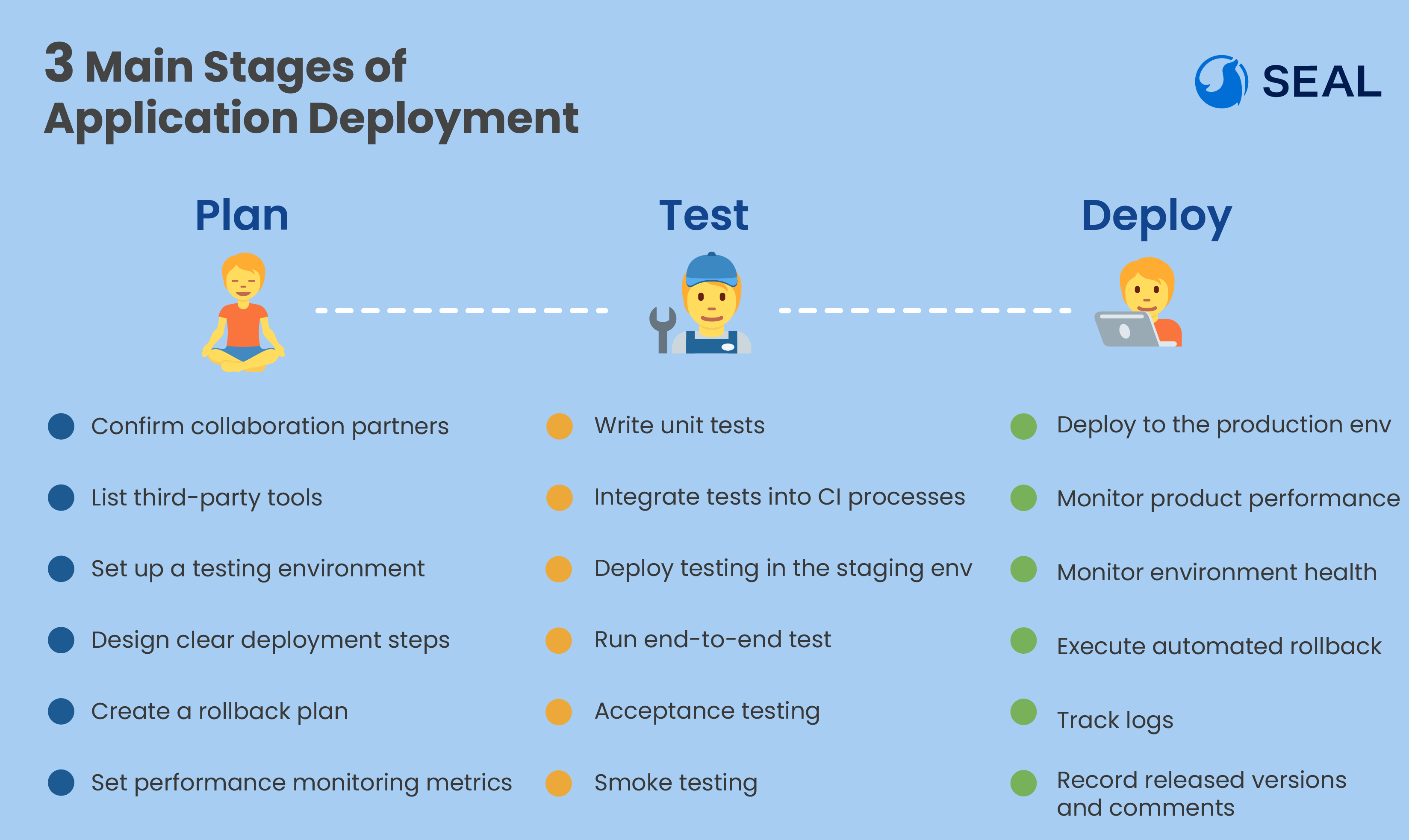

Application Deployment Stages

Early Preparation/Planning Stage

In the planning and preparation stage, the following steps are crucial:

Confirm collaboration partners: Inform all teams involved in the software development process before deployment to minimize friction between development, operations, and security teams.

List third-party tools: Identify all tools required in the deployment process to ensure everyone knows how to use them effectively, minimizing potential issues.

Set up a testing environment: Test the software before the official release to ensure its reliability.

Design clear deployment steps: Communicate closely with the team to ensure a clear and straightforward deployment process.

Create a rollback plan: Prepare a plan for reverting to the previous version in case severe issues arise with the new one. Progressive delivery strategies make seamless automatic rollback possible.

Set performance monitoring metrics: Common metrics include memory usage, CPU usage, and query response time. These metrics, along with custom KPIs, measure the effectiveness of the deployment. In progressive delivery, they can even be used to automatically determine deployment success.
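As a rough illustration of how such metrics might decide deployment success automatically, the sketch below compares observed values against upper limits. The metric names and threshold values are assumptions made up for this example, not recommended settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """Upper limit for one metric (hypothetical names and values)."""
    name: str
    max_value: float


# Illustrative limits only; real limits depend on the application.
THRESHOLDS = [
    Threshold("memory_usage_pct", 80.0),
    Threshold("cpu_usage_pct", 75.0),
    Threshold("query_response_ms", 200.0),
]


def failed_checks(observed: dict[str, float]) -> list[str]:
    """Return the names of metrics that are missing or exceed their limit."""
    failures = []
    for threshold in THRESHOLDS:
        value = observed.get(threshold.name)
        if value is None or value > threshold.max_value:
            failures.append(threshold.name)
    return failures


def deployment_succeeded(observed: dict[str, float]) -> bool:
    """A deployment counts as successful only if every check passes."""
    return not failed_checks(observed)
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a deployment that cannot be measured is not assumed to be healthy.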

Testing Stage

The testing stage validates the reliability of the software before deployment. Consider the following aspects:

Write unit tests: Test a specific part of the software to verify its independent behavior. Unit tests pass when the results align with requirements; otherwise, they fail.

Integrate tests into CI processes: Run unit tests automatically whenever changes are pushed to the shared code repository, so that each part is built and validated on every change. Completing this step before deployment makes bugs easier to find and fix.

Test in the staging environment: Set up a staging environment that simulates production and use it to test updates, code, and other aspects to ensure the software runs as expected.

Run end-to-end tests: Test the entire workflow of the application, running through all possible operations, and checking if it works seamlessly with other components (such as network connections and hardware).

Acceptance testing: The final step in the testing process involves validating the software with relevant stakeholders or real users. Their feedback helps determine if the software is production-ready.

Smoke testing: Create a dedicated test suite to run in production after deployment to verify that the critical functions of the just-released software work.
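To make the unit-testing step more concrete, here is a minimal sketch using Python's standard `unittest` module. The function under test, `apply_discount`, is a hypothetical example invented for illustration. Each test passes only when the result matches the stated requirement.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_applies_percentage(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_percent_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


def run_tests() -> bool:
    """Run the suite in-process and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

In a CI pipeline, a suite like this would typically run on every push, with a failing result blocking the build.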

Deployment and Release Stage

The final stage of application deployment includes the following aspects:

Deploy to the production environment: Push updates to the production environment for users to use.

Monitor product performance: Track product performance against KPIs, checking HTTP error rates and database performance, among other metrics.

Monitor environment health: Use monitoring tools to identify potential issues related to the software environment, such as the operating system, database system, and compiler.

Execute automated rollback: Measure the success of the release using smoke tests and metrics, automatically rolling back to the previous version in case of issues.

Track logs: Gain visibility into the software's operation using logs, understand how it runs on the infrastructure, investigate errors, and identify potential security risks.

Record released versions and comments: Save copies of new versions created during product modifications, helping maintain consistency.
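The automated rollback step can be sketched roughly as follows. This is a simplified illustration, not a production implementation: the `deploy` and `smoke_test` callables are placeholders (assumptions) for whatever deployment tooling and test suite a team actually uses.

```python
from typing import Callable


def release_with_rollback(
    deploy: Callable[[str], None],
    smoke_test: Callable[[], bool],
    previous_version: str,
    new_version: str,
) -> str:
    """Deploy new_version; redeploy previous_version if the smoke test fails.

    Returns the version that is live when the function finishes.
    """
    deploy(new_version)
    try:
        healthy = smoke_test()
    except Exception:
        # A crashing smoke test is treated the same as a failing one.
        healthy = False
    if healthy:
        return new_version
    deploy(previous_version)  # automated rollback
    return previous_version


# Usage with stand-in callables: the smoke test fails, so 1.4.0 is restored.
history: list[str] = []
live = release_with_rollback(history.append, lambda: False, "1.4.0", "1.5.0")
```

Here `history` ends up recording both the attempted release and the rollback, which also hints at why recording released versions is useful.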

Application Deployment Patterns

Canary Release

Canary release allows for the incremental release of new features rather than complete updates. Developers keep the old version active, comparing performance before and after the update. Canary releases initially offer new features to a small subset of users, adjusting the product based on user feedback.

The basic steps of a Canary release:

Developers upload the new version (assuming it's Version B) to the server.

The main user group remains directed to Version A, while a small subset starts using Version B. Developers monitor the performance of the new version and make improvements as needed.

After necessary adjustments to Version B, developers redirect most traffic to the updated instance. The team then measures performance and compares it with the old version.

When Version B is stable enough, developers cut off Version A and completely redirect traffic to the updated codebase.
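One common way to send a small, stable subset of users to Version B is to hash each user ID into a bucket and compare it with the current canary percentage. The sketch below assumes string user IDs and a percentage-based split; both are illustrative choices rather than part of any particular tool.

```python
import hashlib


def bucket_for(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_version(user_id: str, canary_percent: int) -> str:
    """Return "B" for users inside the canary share, otherwise "A"."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return "B" if bucket_for(user_id) < canary_percent else "A"
```

Because the bucket depends only on the user ID, a user who already sees Version B keeps seeing it as the percentage is raised, and setting the percentage to 100 corresponds to the final cut-over from Version A.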

To ensure the success of Canary releases, developers must set clear performance metrics. The original version and the Canary version (updated version) should be deployed under the same conditions for objective analysis.

One advantage of Canary releases is that they minimize downtime and limit the impact of potential issues in the new version. Testing features with a small user base allows developers to discover and fix issues before they affect a larger user base, so that new features or updates don't degrade the user experience.

However, this deployment pattern has its drawbacks, the main one being that it is time-consuming. Canary testing and deployment occur in multiple phases, requiring time for thorough monitoring and evaluation. Therefore, not all users can immediately benefit from new features and upgrades. In addition, as in many strategies that run two versions simultaneously, developers need to ensure compatibility with the technology stack and databases.

Blue/Green Deployment

Blue/Green deployment involves running two application versions, current (Blue) and new (Green), in separate environments, with only one version serving users at a time. While the Green version is being tested, the Blue version keeps serving traffic, and the roles are reversed after the switch. Both versions can be active, but only one is public (the Blue version until the switch).

Once testing is complete and the new deployment is ready, traffic can safely switch to the Green version. Afterward, the Blue environment can be retained for rollback operations or used for future updates.

Blue/Green deployment almost eliminates downtime and allows for immediate rollback. The isolation of Blue/Green environments protects the live deployment from bugs during the testing phase. Although this deployment strategy has lower risks, its implementation costs are higher, as it requires operating two environments, increasing costs in storage space, computing power, hardware, etc. Seamless switching between Blue and Green environments also requires ensuring compatibility with data formats and storage.
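The switch itself can be thought of as flipping a single pointer between the two environments. The class below is a toy model of that idea; the name `BlueGreenRouter` and its methods are invented for illustration. In practice the pointer is typically a load balancer or DNS setting.

```python
class BlueGreenRouter:
    """Tracks which of two environments receives live traffic."""

    def __init__(self, live: str = "blue") -> None:
        if live not in ("blue", "green"):
            raise ValueError("live must be 'blue' or 'green'")
        self.live = live

    @property
    def idle(self) -> str:
        """The environment that is not serving users right now."""
        return "green" if self.live == "blue" else "blue"

    def switch(self, idle_is_healthy: bool) -> str:
        """Promote the idle environment if it passed its checks."""
        if not idle_is_healthy:
            raise RuntimeError(f"{self.idle} failed checks; keeping {self.live} live")
        self.live = self.idle
        return self.live

    def rollback(self) -> str:
        """Point traffic back at the other environment, which was kept intact."""
        self.live = self.idle
        return self.live
```

Rollback is immediate in this model because the previous environment is never torn down, which is also why the pattern costs more to operate.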

Rolling Deployment

With rolling deployment, developers replace instances of the old version with the new version gradually, so both versions run side by side during the rollout. The number of instances updated at the same time is known as the window size. Developers can update one instance at a time (setting the window size to 1) or update all instances in the cluster in parallel. To deploy faster and more safely, developers often use container technologies. Docker and Kubernetes are common tools for isolating updated versions, enabling and disabling services, and tracking changes.

Rolling deployment provides flexibility and reduces downtime because it redirects traffic to the new version only after it's ready. Additionally, it lowers deployment risk, as defects in the update only affect a limited number of users. However, this deployment method has a slower rollback speed since it requires a gradual approach.

Moreover, new deployments must be backward compatible, as they need to coexist with the old version. If the application requires continuous sessions, ensuring load balancing supports sticky sessions is crucial.
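The role of the window size can be illustrated with a short sketch. It assumes two placeholder callables, `update` and `is_healthy`, standing in for real orchestration tooling, and it halts at the first unhealthy batch. That halting policy is one possible choice, not the only one.

```python
from typing import Callable


def rolling_update(
    instances: list[str],
    window_size: int,
    update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> list[str]:
    """Update instances in batches of window_size; stop at the first unhealthy batch.

    Returns the instances that were updated and passed their health check.
    """
    if window_size < 1:
        raise ValueError("window_size must be at least 1")
    updated: list[str] = []
    for start in range(0, len(instances), window_size):
        batch = instances[start:start + window_size]
        for name in batch:
            update(name)
        if not all(is_healthy(name) for name in batch):
            # Halt so the remaining instances keep serving the old version.
            break
        updated.extend(batch)
    return updated
```

A smaller window limits how many users a defective update can reach, at the cost of a longer rollout.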

Shadow Deployment

Shadow deployment runs two versions in parallel: each incoming request is served by the current version, and a copy of it is also sent to the new version. This method helps test how new features handle production loads without affecting the responses users receive. Once the new version meets performance and stability requirements, the application can be safely launched.

While this approach is highly specialized and complex to set up, it avoids any impact on production by using replicated traffic (shadow data) to find bugs. Test results are highly accurate because production load creates realistic conditions. Shadow deployment is considered a low-risk method and is often combined with other approaches, such as Canary deployment.

Steps to execute shadow deployment:

Implementation at the Application Level:

Developers write functions that can send input to both the current and new versions of the application. Input and output can be processed simultaneously or asynchronously in a queue for higher accuracy. Teams can choose to segment input on the client side, setting different API targets for browsers or mobile devices.

Implementation at the Infrastructure Level:

Developers configure the load balancer to fork traffic and expose endpoints for both versions. They need to ensure that nothing is processed twice, and the application must not request the same data twice. The new version should receive user information from the first version; otherwise, users will have to re-enter payment or registration data.

Assessment of Shadow Mode Results:

Teams need to pay attention to data discrepancies, compare the performance of the two versions, and check whether the new version can correctly receive input from the old version.
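At the application level, the forking and comparison steps above might look roughly like the following sketch. It is deliberately simplified: the shadow call is made synchronously, whereas a real setup would more likely mirror requests asynchronously, and the function and parameter names are assumptions for illustration. It also assumes the shadow handler has no side effects, such as charging a payment.

```python
from typing import Any, Callable


def handle_with_shadow(
    request: Any,
    current: Callable[[Any], Any],
    shadow: Callable[[Any], Any],
    mismatches: list[dict],
) -> Any:
    """Serve the request from `current` and mirror it to `shadow`.

    Only the current version's response is returned to the user. Shadow
    errors and differing outputs are recorded in `mismatches` for review.
    """
    response = current(request)
    try:
        shadow_response = shadow(request)
    except Exception as exc:
        # A failure in the new version must never reach the user.
        mismatches.append({"request": request, "error": repr(exc)})
        return response
    if shadow_response != response:
        mismatches.append(
            {"request": request, "current": response, "shadow": shadow_response}
        )
    return response
```

Reviewing the collected `mismatches` is one simple way to spot the data discrepancies mentioned above before the new version is launched.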

Summary

This article briefly introduces the main stages of application deployment and common patterns. In future articles, we will explore application deployment in microservices architecture and discuss securing application deployment and best practices. Stay tuned!